I spent some time hacking on Doom 3, adding support for EGL and OpenGL ES 2.0; obviously there is still a significant amount of work to be completed before this looks even remotely like the game. Some of you might make out the text console in this image, rendered with the incorrect shader. :-) Phoronix, go nuts... :-)

(Updated again 2012-05-07: Doom 3 is nothing without stencil shadow volumes!)

(Updated 2012-05-07: I discovered and fixed a little bug in the modelViewProjection matrix...)

Saturday, April 28, 2012

Wednesday, April 25, 2012

Google Calendar Bug-Fixes

I have discovered some bugs in my previous entry regarding Google Calendar synchronization. Firstly, there is a bug in the locking mechanism causing it to sometimes hang while running fetchmail. The following are the corrected .procmailrc entries:

    #
    # === Google Calendar (from Microsoft Exchange; iCalendar) ===
    #
    :0 c:
    * H ?? ^Content-Type: multipart/(alternative|mixed)
    * B ?? ^Content-Type: text/calendar
    {
        :0 Wac: Mail/multipart.lock
        | munpack -q -t -C ~/Mail/.munpack 2> /dev/null

        :0 Wac: Mail/multipart.lock
        | /home/oliver/bin/calendar.sh

        :0 Wac: Mail/multipart.lock
        | rm -f ~/Mail/.munpack/*
    }

I discovered the second bug when synchronizing a particular iCalendar file containing several BEGIN:VEVENT blocks. Events are expected to have a UID field. I do not know whether this should be unique within the scope of your entire calendar, but it must be unique within the scope of the BEGIN:VCALENDAR block (i.e. the entire iCalendar file).

Exchange, in all its infinite stupidity, decides to assign the same UID to every VEVENT, and as soon as you attempt to upload it to Google Calendar via WebDAV you will receive a cryptic error message:

500 Server error code: ........ (Pseudo-random characters)

You'll receive an even less helpful error message when trying the "Import Calendar" feature of the web interface:

Failed to import events: Could not upload your events because you do not have sufficient access on the target calendar.

What is the solution? Well, funnily enough, a Unique Identifier (UID) should actually be unique! Changing those UIDs to some unique values and uploading the file again works perfectly! (Anything works; I used echo $RANDOM | md5sum | cut -d ' ' -f 1, i.e. the MD5 hash of a pseudo-random number.)

I am only aware of one such formatted calendar entry in my dataset, so I adjusted it manually. However, I'd feel safer using sed or awk to replace each "UID" with a real unique identifier. I'll post such a modified calendar.sh in the second part, whenever I feel like playing with awk again! You could always beat me to it... Happy hacking!
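As a rough sketch of that idea (not the promised calendar.sh update), the awk filter below rewrites every UID line with a distinct value. The function name uniquify_uids and the UID format are made up for illustration. It assumes each event really is a separate event: recurring-event overrides that share a UID on purpose would be split apart. A folded UID continuation line is left in place and simply ends up appended to the new value.

```shell
#!/bin/bash
# Hypothetical helper (not from the original calendar.sh): read an iCalendar
# stream on stdin and give every UID line a distinct value on stdout.
uniquify_uids() {
    # Per-run prefix built like the one-liner in the post, so values from
    # separate runs are unlikely to collide.
    local prefix
    prefix="$(echo "${RANDOM}-$$-$(date +%s)" | md5sum | cut -d ' ' -f 1)"

    awk -v prefix="${prefix}" '
        /^UID[:;]/ {
            # Preserve a CRLF line ending if the input line had one
            eol = ($0 ~ /\r$/) ? "\r" : ""
            printf "UID:%s-%d%s\n", prefix, ++n, eol
            next
        }
        { print }
    '
}
```

Usage would be along the lines of uniquify_uids < invite.ics > fixed.ics, run on each file before the cadaver upload. Note that re-running it on the same invitation produces new UIDs again, so the same event uploaded twice would show up twice.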

Sunday, April 22, 2012

Doom 3 GLSL

I recently implemented a GLSL renderer backend for Doom 3. Yes, there are already a couple of existing backends (e.g. raynorpat's); unfortunately these did not run successfully on my hardware and had serious rendering and pixel errors.

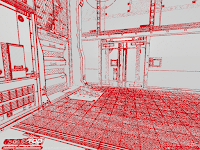

These images are from the first implementation of my backend, where I had accidentally called normalize() on a vector which was almost normalized. The result is pixel-imperfection when compared to the standard ARB2 backend, and the cost of pointless normalization in the fragment shader.

You can also see the importance of running a comparison or image-diff program when implementing a new backend. Can you see the differences between the first two images immediately, with the naked eye? I couldn't.

Finally, here is the backend running the hellhole level. The black regions are areas that would be rendered by the (currently unimplemented in GLSL) heatHaze shader. Not bad for an i965 GPU.

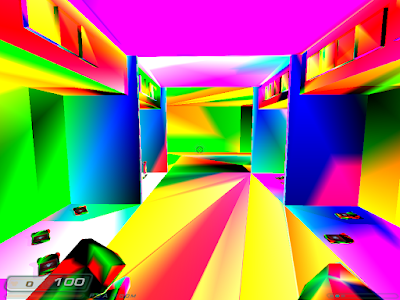

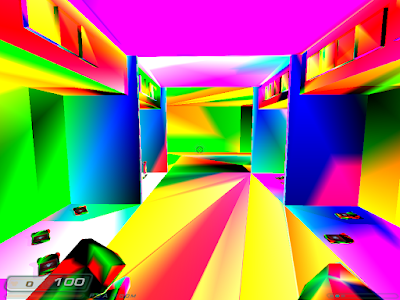

Just for the laughs, here is what happens when Doom 3 decides to try LSD; or fails to pass initialized texture coordinates from the vertex program to the fragment program in the ARB2 backend.

Labels: Bug, Development, Doom 3, GLSL, OpenGL

Friday, April 20, 2012

From fetchmail/procmail to Google Calendar

I spent many hours scouring Google before finally figuring out how to implement this easily. Calendar synchronization is very important to me; I want to be able to look at my phone, my tablet, or my browser and see my entire schedule (otherwise I'd never show up anywhere.)

.procmailrc:

    :0 Wc: Mail/multipart.lock
    * H ?? ^Content-Type: multipart/(alternative|mixed)
    * B ?? ^Content-Type: text/calendar
    {
        :0 Wac:
        | munpack -q -t -C ~/Mail/.munpack 2> /dev/null

        :0 Wac:
        | /home/oliver/bin/calendar.sh

        :0 Wc:
        | rm -f ~/Mail/.munpack/*
    }

This beautiful undocumented recipe is actually quite simple, but perhaps needs some explanation. From man procmailrc:

A line starting with ':' marks the beginning of a recipe. It has the following format:

    :0 [flags] [ : [locallockfile] ]
    zero or more conditions (one per line)
    exactly one action line

W means wait until the program finishes, check its exit code, and suppress any "Program failure" message. a means the preceding recipe must have successfully completed before procmail will execute this recipe. Finally, c means carbon-copy (this recipe does not handle delivery of the mail, so it must proceed further through the chain of recipes).

It may be more appropriate to use f (this pipe is a filter) instead. I am new to procmail configuration; c works for me.

First, match messages containing a Content-Type header of either multipart/alternative or multipart/mixed; second, check the message body for a Content-Type of text/calendar (which is the data we want). If both conditions are true, munpack unpacks the MIME multipart message into separate files in a temporary directory. Then calendar.sh runs and does the magic, and finally we clean up after ourselves.
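To check a saved message against those two conditions without touching live mail delivery, something like the following sketch can help. The function name is_calendar_mail is hypothetical, and it only approximates procmail: it splits header and body at the first blank line and matches case-insensitively, but it does not unfold continued header lines the way procmail does.

```shell
#!/bin/bash
# Hypothetical helper: succeed if the saved message in "$1" would satisfy
# both conditions of the recipe (procmail matches case-insensitively).
is_calendar_mail() {
    local msg="$1"
    # "H ??": search the header, i.e. everything up to the first blank line
    sed '/^$/q' "${msg}" |
        grep -Eiq '^Content-Type: multipart/(alternative|mixed)' || return 1
    # "B ??": search the body, i.e. everything after that blank line
    sed '1,/^$/d' "${msg}" |
        grep -iq '^Content-Type: text/calendar'
}
```

For example, is_calendar_mail ~/Mail/sample.eml && echo "would be unpacked" shows whether a given message would reach munpack (the path is only an example).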

calendar.sh:

    #!/bin/bash
    http_proxy=http://proxy.example.com:8080
    CALENDAR_ID='XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX@group.calendar.google.com'

    # Upload every unpacked attachment that looks like an iCalendar file
    for i in ~/Mail/.munpack/*; do
        if grep -q 'BEGIN:VCALENDAR' "${i}" 2> /dev/null; then
            # Drive cadaver: wait for its prompt, put the file, then quit
            expect -dc \
                "spawn /usr/bin/cadaver -p ${http_proxy/http:\/\/} \
                https://www.google.com/calendar/dav/${CALENDAR_ID}/events ; \
                expect \"dav:\" ; \
                send \"put ${i}\\r\" ; \
                expect \"dav:\" ; \
                send \"bye\\r\""
        fi
    done

Newlines have been inserted into the script so that it doesn't break layouts on other pages; you will need to fix those if copying this script.

I use expect and cadaver to upload the iCalendar file extracted by munpack to Google Calendar via their WebDAV interface. Finding the CALENDAR_ID is a little confusing. You can find it under the "Settings" option when you log in to Google Calendar via a browser. You must use the public Calendar Address, not the Private Address, but you do not need to share the calendar publicly; Google will request your login details via WebDAV. This is easiest to configure in .netrc. chmod 600 the file for some additional safety.

    machine www.google.com login john.doe password example123

The login should not include the @gmail.com, otherwise it will fail. Happy hacking!

Fun with UTF-8

I finally decided to configure my computers for UTF-8, mostly due to the frustration of attempting to determine which Finnish character should have been displayed (and the probable annoyance of Finns having their names mangled by my mail client.)

- /etc/locale.gen is useful for generating only the locales you actually need. I selected English (US and UK), Finnish, and Swedish as UTF-8, ISO-8859-1, and ISO-8859-15 (where appropriate.)

- .bashrc: export LANG=en_US.UTF-8

- Setup .Xdefaults:

      XTerm*Background: Black
      XTerm*Foreground: White
      XTerm*UTF8: 2
      XTerm*utf8Fonts: 2

Actually the UTF-8 options are not required due to the way I am launching terminals from my window manager, but it does not cause any damage. Black terminal background is mandatory!

The fun part was figuring out why my xterm, specified in .ratpoisonrc as bind c exec xterm -u8 did not launch a UTF-8 terminal. I then realized that Ratpoison was launching xterm from a bare-metal shell and the LANG environment variable was never set; quickly changing the binding to bind c exec xterm +lc -u8 (switch off automatic selection of encoding and respect my -u8 option) resolved the final issue.

Now I can happily read the ä's, ö's, and Å's from a language I do not understand (excluding a few keywords and phrases.) Cool!
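When a terminal refuses to show UTF-8, a quick look at the locale from inside that terminal narrows things down. The sketch below is only a diagnostic aid; the function names are made up, and it assumes the locale utility is installed (it is on typical glibc systems).

```shell
#!/bin/bash
# Hypothetical helper: succeed if the current locale uses UTF-8.
is_utf8_locale() {
    [ "$(locale charmap 2> /dev/null)" = "UTF-8" ]
}

# Print the variables that decide the encoding, then the verdict
report_locale() {
    echo "LANG=${LANG:-<unset>} LC_ALL=${LC_ALL:-<unset>} LC_CTYPE=${LC_CTYPE:-<unset>}"
    if is_utf8_locale; then
        echo "UTF-8 locale active"
    else
        echo "not a UTF-8 locale"
    fi
}
```

Running report_locale in an xterm started from the window manager shows whether LANG actually reached it, which was the missing piece in the Ratpoison case above.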
